Collation torture test results are finally finished and uploaded for Debian.

https://github.com/ardentperf/glibc-unicode-sorting

The test did not pick up any changes in en_US sort order for either Bullseye or Bookworm 🎉️

Buster has glibc 2.28 so it shows lots of changes – as expected.

The Postgres wiki had claimed that Jessie(8) to Stretch(9) upgrades were safe. This is false if the database contains non-English characters from many scripts (even with the en_US locale). I just tweaked the wording on that wiki page. I don’t think this is new info; I think it’s the same change that showed up in the Ubuntu tables under glibc 2.21 (Ubuntu 15.04).

FYI – the changelist for Stretch(9) does contain some pure ASCII words like “3b3”, but when you drill down to the diff, you see that it’s only moving a few lines relative to other strings with non-English characters:

@@ -13768521,42 +13768215,40 @@ $$.33

༬B༬

3B༬

3B-༬

-3b3

3B༣

3B-༣

+3B٣

+3B-٣

+3b3

3B3

In the process of adding Debian support to the scripts, I also fixed a few bugs. I’d been running the scripts from a Mac but now I’m running them from an Ubuntu laptop, and a few minor syntax things needed updating for Linux. Ironically, when I first started building these scripts it was on another Linux machine, before I switched to Mac. I also added a file size sanity check, to catch cases where the sorted string-list file was only partly downloaded from the remote machine running some old OS (… realizing this MAY have wasted about an hour of my evening yesterday …)
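As a rough illustration of that kind of size check (this is not the code in the repo; the function name and the idea of passing in an expected byte count are assumptions made for the example), a minimal Python sketch could look like this:

```python
import os


def check_download_size(local_path, expected_bytes):
    """Raise ValueError if the downloaded file is not exactly expected_bytes long.

    Hypothetical helper: expected_bytes has to come from somewhere trustworthy,
    for example a byte count taken on the remote machine before the transfer.
    """
    actual = os.path.getsize(local_path)
    if actual != expected_bytes:
        raise ValueError(
            f"{local_path}: got {actual} bytes, expected {expected_bytes} "
            "(partial download?)"
        )
    return actual
```

One possible source for the expected size is the unsorted input file itself: sorting only reorders lines, so the sorted file should have the same byte count as long as the input ends with a newline.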

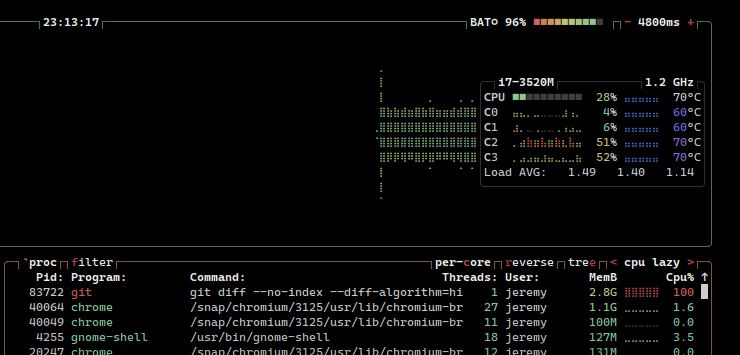

The code that sorts the file on the remote instance is pretty efficient. It does the sort in two stages and the first stage is heavily parallelized to utilize whatever CPU is available. Lately I’ve mostly used c6i.4xlarge instances and I typically only need to run them for 15-20 minutes to get the data and then I terminate them. The diffs and table generation run locally. On my poor old laptop, the diff for Buster ran at 100% CPU and 10°C hotter than the idle core for 20+ minutes 😀 and the final table generation took 38 minutes.
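The real implementation lives in run.sh in the repo. Purely to illustrate the general two-stage idea, here is a hedged Python sketch that splits the file into chunks, runs the system “sort” on the chunks in parallel, and then merges the sorted chunks with “sort -m”. The function name, the chunk size, and the use of Python are all assumptions for the example, not a description of what the scripts actually do.

```python
import os
import subprocess
import tempfile
from concurrent.futures import ThreadPoolExecutor


def two_stage_sort(src, dst, chunk_lines=100_000, workers=None, lc_all="C"):
    """Sort the lines of src into dst: parallel chunk sorts, then one merge."""
    # The locale in LC_ALL decides the collation order used by sort.
    env = dict(os.environ, LC_ALL=lc_all)
    with tempfile.TemporaryDirectory() as tmp:
        # Split the input into chunk files of at most chunk_lines lines each.
        chunks, out = [], None
        with open(src, "rb") as f:
            for i, line in enumerate(f):
                if i % chunk_lines == 0:
                    if out:
                        out.close()
                    chunks.append(os.path.join(tmp, f"chunk{len(chunks):06d}"))
                    out = open(chunks[-1], "wb")
                out.write(line)
        if out:
            out.close()
        if not chunks:  # empty input: nothing to sort
            open(dst, "wb").close()
            return

        # Stage 1: sort every chunk in place. Each thread just waits on its own
        # sort process, so the sort processes themselves run in parallel.
        def sort_chunk(path):
            subprocess.run(["sort", "-o", path, path], env=env, check=True)

        with ThreadPoolExecutor(max_workers=workers or os.cpu_count()) as pool:
            list(pool.map(sort_chunk, chunks))

        # Stage 2: a single merge pass over the already-sorted chunks.
        subprocess.run(["sort", "-m", "-o", dst, *chunks], env=env, check=True)
```

Passing something like `lc_all="en_US.UTF-8"` would make both stages use that locale’s collation rules, assuming the locale is installed on the machine doing the sort.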

Note that the regular/default Linux diff executable uses the classic Myers algorithm. This works if the changeset is very small, but unfortunately a large changeset produced by this particular test suite overwhelms it. When I used it to compare sorted lists from glibc 2.27 and glibc 2.28, the diff command ran at 100% CPU for 4 days before I gave up waiting. This was true with default flags and also with the “--speed-large-files” flag. An easy way to get access to a different algorithm was to leverage git. Its “histogram” algorithm completed the glibc 2.27 and 2.28 comparison in about 26 minutes. (Did you know git has multiple diff algorithms you can choose from?) There are a few other interesting tidbits tucked away in the comments of this GitHub repo too… like careful coding in run.sh to support not only the latest Unix operating systems but also stuff as old as bash v3.2.25 and perl v5.8.8.
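For anyone who wants to try the git approach on two arbitrary files, here is a minimal sketch. It assumes git is installed and on the PATH; the wrapper function and its name are made up for this example and are not taken from the repo. The `--no-index` option lets git diff compare two paths that are not in a repository, and `--diff-algorithm=histogram` selects the histogram algorithm.

```python
import subprocess


def histogram_diff(old_path, new_path, out_path):
    """Write a histogram-algorithm diff of two files to out_path.

    Returns True if the files differ and False if they are identical.
    """
    # Stream git's output straight to a file so a huge diff never sits in memory.
    with open(out_path, "wb") as out:
        result = subprocess.run(
            ["git", "diff", "--no-index", "--diff-algorithm=histogram",
             "--", old_path, new_path],
            stdout=out,
            stderr=subprocess.PIPE,
        )
    # With --no-index, exit status 0 means no differences and 1 means differences.
    if result.returncode not in (0, 1):
        raise RuntimeError(result.stderr.decode(errors="replace"))
    return result.returncode == 1
```

The other values git accepts for `--diff-algorithm` are `myers` (the default), `minimal`, and `patience`.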

Reviewing the data, the en_US comparisons are accurate and very straightforward. It all starts with the same simple text file – 25 million strings – and the script runs Unix “sort” on that file on the target OS before downloading it. Then we locally do a “diff” between the files. The raw output of the “diff” command is directly uploaded to GitHub so that anyone can see the base data and check that summaries are accurate.

The script also downloads the OS locale data files from /usr/share/i18n/locales and does a recursive diff directly against the downloaded directories. The raw results of this diff are also uploaded to GitHub. I was reviewing summary info in the README.md tables today and there might be a bug in the code that generates the summary. I’m not sure, but the underlying data and raw diff output are straightforward and available.
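To spot-check which locale files changed between two downloaded copies of the locales directory, something like the following Python sketch could be used. It is an assumption-laden stand-in for a recursive diff, not the repo’s code: the function names are invented, and it only reports which files were added, removed, or changed rather than producing line-level output.

```python
import filecmp
import os


def _relative_files(root):
    """Return the set of file paths under root, relative to root."""
    found = set()
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            found.add(os.path.relpath(os.path.join(dirpath, name), root))
    return found


def compare_trees(old_root, new_root):
    """Summarize which files were removed, added, or changed between two trees."""
    old_files = _relative_files(old_root)
    new_files = _relative_files(new_root)
    changed = sorted(
        rel
        for rel in old_files & new_files
        # shallow=False forces a byte-by-byte comparison of the file contents.
        if not filecmp.cmp(
            os.path.join(old_root, rel), os.path.join(new_root, rel), shallow=False
        )
    )
    return {
        "removed": sorted(old_files - new_files),
        "added": sorted(new_files - old_files),
        "changed": changed,
    }
```

A result with an empty "changed" list for a file like en_US does not by itself prove the sort order is unchanged, since locale files can include other files, so the sorted-strings diff remains the primary evidence.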

For anyone who’s interested in learning more about the background of this test suite, you can watch the recording of a presentation that Jeff Davis and I gave at PGConf.dev 2024 titled “Collations from A to Z” (putting words in order without losing your mind or your data).

And for those of you running Postgres on Docker… remember to pin the major version of your base image operating system!

Now if someone will please ping me a year from now to make me feel guilty about still not yet updating the tables that have ICU and RHEL collation changes 😆️
